EVO is launching a self-service online test portal for commercial advanced measurement and verification (M&V) tools (i.e., M&V tools and methods applicable to commercial buildings’ hourly consumption data). The portal is being licensed from Lawrence Berkeley National Laboratory (Berkeley Lab), and we caught up with Eliot Crowe, Program Manager at Berkeley Lab, to learn more.

EVO: Why develop a test portal for advanced M&V tools?

EC: There are a growing number of software tools offering advanced M&V capabilities for commercial applications, using interval data. Many use sophisticated modeling techniques, and some vendors want to keep their modeling methods proprietary. Until now, the target audience for these tools - utilities, for example - has had no way to compare the accuracy and quality of these tools. On the flip side, tool vendors have had no basis for objectively showing the quality of their tool compared to the alternatives.

EVO: What does the test involve?

EC: It’s a three-step process. First, the user downloads the test data set from the online portal, comprising hourly kWh and weather data for 367 buildings, and creates a model for each building, covering a 12-month “training period.” Second, using a separate weather data file, the user runs the M&V software tool to generate modeled kWh values for a separate 12-month “prediction period,” for all buildings in the data set - the user doesn’t get to see actual kWh values for this period. Finally, the user uploads their kWh predictions to the portal and receives their test scores. The test method was demonstrated by Berkeley Lab a few years ago, though the online portal uses an updated test data set.

EVO: How are test scores established?

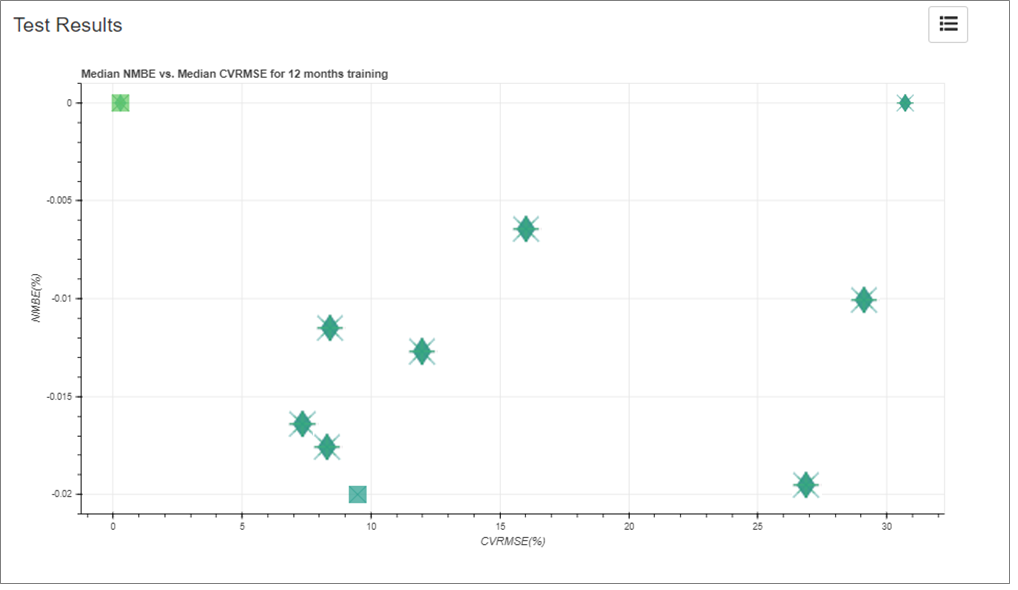

EC: Two metrics are used: normalized mean bias error (NMBE) and the coefficient of variation of the root mean squared error, CV(RMSE). Users will see the median values for those metrics across all of the buildings in the test data set.
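For readers who want to see how the two metrics and their medians fit together, here is a minimal Python sketch. It uses common textbook definitions, which are an assumption here: the portal's exact formulas, its sign convention for NMBE (actual minus predicted is assumed below), and whether it takes the median of signed or absolute NMBE values are not stated in this interview. The function names and the `buildings` structure are hypothetical.

```python
from math import sqrt
from statistics import mean, median


def nmbe(actual, predicted):
    """Normalized mean bias error, in percent.

    Assumed convention: residual = actual - predicted, normalized by
    the mean of the actual values.
    """
    residuals = [a - p for a, p in zip(actual, predicted)]
    return 100.0 * mean(residuals) / mean(actual)


def cv_rmse(actual, predicted):
    """Coefficient of variation of the RMSE, in percent."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return 100.0 * sqrt(mean(squared_errors)) / mean(actual)


def summarize(buildings):
    """Median of each metric across buildings.

    `buildings` is a hypothetical dict mapping a building id to a pair
    (actual_kwh, predicted_kwh) of equal-length lists.
    """
    nmbe_values = [nmbe(a, p) for a, p in buildings.values()]
    cv_values = [cv_rmse(a, p) for a, p in buildings.values()]
    return {
        "median_nmbe": median(nmbe_values),
        "median_cv_rmse": median(cv_values),
    }
```

NMBE keeps its sign, so over- and under-predictions can cancel out, while CV(RMSE) squares the errors and captures their overall size. Reporting both gives a view of bias and of scatter.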

EVO: Those are model fitness metrics - so is the test measuring model fitness?

EC: Good question! Not quite. This is out-of-sample testing: the models are developed using a 12-month training period, but the test metrics are calculated by comparing model-predicted values to actuals for a different 12-month period. So the portal is assessing predictive accuracy rather than model fitness.
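The distinction can be illustrated with a toy Python sketch. Everything in it is hypothetical: the numbers are made up, and a one-variable straight-line fit of kWh against temperature stands in for a real M&V model (it is not the TOWT model or any vendor's method). The same metric is computed twice, once on the data the model was fitted to and once on data it never saw.

```python
from math import sqrt
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    slope = s_xy / s_xx
    return slope, y_bar - slope * x_bar


def cv_rmse(actual, predicted):
    """Coefficient of variation of the RMSE, in percent."""
    squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return 100.0 * sqrt(mean(squared_errors)) / mean(actual)


# Made-up training period data: the user sees both weather and kWh.
train_temp = [10, 15, 20, 25, 30]
train_kwh = [52, 61, 70, 81, 90]

# Made-up prediction period data: the user sees only the weather;
# the actual kWh values are held back for scoring.
predict_temp = [12, 18, 22, 28, 33]
held_back_kwh = [58, 66, 77, 85, 99]

slope, intercept = fit_line(train_temp, train_kwh)
fitted = [intercept + slope * t for t in train_temp]
predicted = [intercept + slope * t for t in predict_temp]

# In-sample: how well the model reproduces the data it was fitted to.
model_fitness = cv_rmse(train_kwh, fitted)
# Out-of-sample: how well it predicts a period it has never seen.
predictive_accuracy = cv_rmse(held_back_kwh, predicted)
```

With this toy data the out-of-sample error comes out larger than the in-sample error, which is the usual pattern and the reason a fitness score alone can flatter a model.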

EVO: What does it take for an M&V tool to pass this test?

EC: This is more of a benchmarking method than a case of “pass” or “fail.” Test results are posted on the portal so that tools can be objectively compared, and over time we can establish rules of thumb on what constitutes a ‘good’ result. As a starting point Berkeley Lab has posted test results using its Time-of-Week and Temperature (TOWT) model, and we are encouraging others to join us! While test results are posted on the portal, users have the option to anonymize the tool name.

EVO: So if a utility sees that an M&V tool ranks high using this test portal it has peace of mind that it will produce accurate results?

EC: If only life were that simple! To make an analogy, a good test result is like saying you have a good hammer, but like all tools you can use it well or use it badly! Having an objective way to compare tools using the M&V test portal is a big step forward, and will hopefully give peace of mind to those wanting to adopt advanced M&V methods. But it doesn’t negate the need to have sound policies and procedures in place to ensure the tool is applied sensibly - luckily there is a growing body of guidance on that topic.

To find out more about the new M&V tool test portal, sign up for EVO’s webinar on April 30. The EVO M&V Tool Test Portal link will be posted after the webinar.

Example chart from EVO’s M&V tool testing portal, displaying test results to allow for objective comparison of model accuracy.